I recently built a small web app that enables simple 'Copy & Paste' image editing based on the Paint By Example paper. This post highlights some of the interesting things that I learned along the way.

(This project is no longer hosted publicly, so you can read about it but can't try it out.)

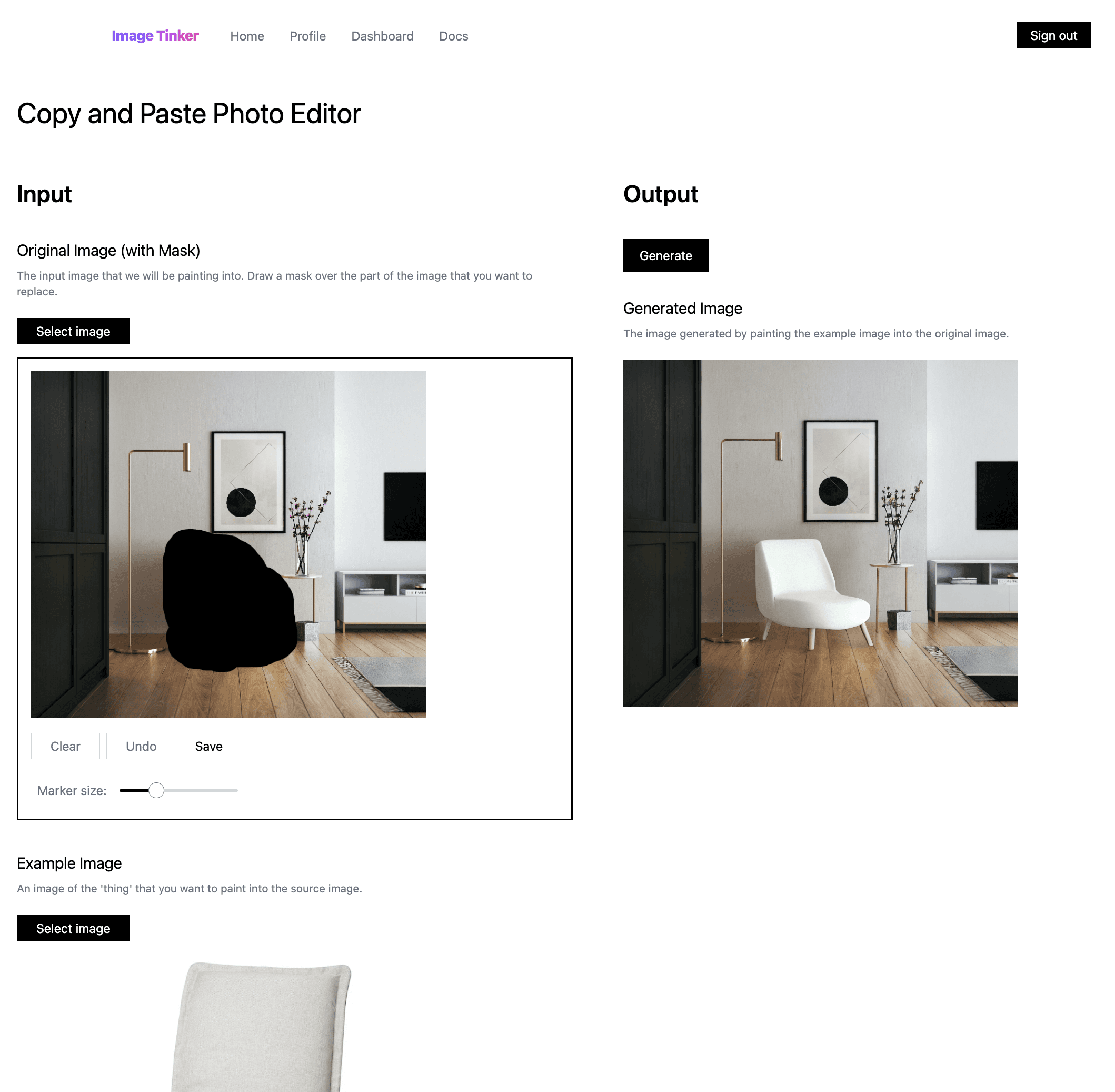

A screenshot of the Copy & Paste AI photo editing tool.

What is Paint By Example?

Paint By Example is an image diffusion model that enables concepts from an example image to be merged into an input image. The model is similar to popular prompt-guided image-to-image Stable Diffusion models, but it conditions the diffusion model on example image embeddings rather than text prompt embeddings.
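If you want to experiment with the model yourself, one option is the `PaintByExamplePipeline` class in Hugging Face `diffusers`. The sketch below is not necessarily how this app called the model. The function name `paint_by_example`, the 512x512 resize, and the choice of the `Fantasy-Studio/Paint-by-Example` checkpoint are assumptions made for illustration, and running it needs `torch`, `diffusers`, `Pillow`, and ideally a GPU.

```python
# Assumed checkpoint: the publicly released Paint By Example weights on the Hugging Face Hub.
MODEL_ID = "Fantasy-Studio/Paint-by-Example"


def paint_by_example(input_path, mask_path, example_path, device="cuda"):
    """Repaint the masked region of the input image using an example image.

    Hypothetical helper for illustration. Heavy dependencies are imported
    lazily so that merely defining the function does not require them.
    """
    import torch
    from diffusers import PaintByExamplePipeline
    from PIL import Image

    size = (512, 512)  # assumption: a square resolution the checkpoint handles well
    init_image = Image.open(input_path).convert("RGB").resize(size)
    # Assumed mask convention: white pixels are repainted, black pixels are kept.
    mask_image = Image.open(mask_path).convert("L").resize(size)
    example_image = Image.open(example_path).convert("RGB").resize(size)

    pipe = PaintByExamplePipeline.from_pretrained(MODEL_ID, torch_dtype=torch.float16)
    pipe = pipe.to(device)

    # Note that there is no text prompt: the example image plays that role.
    result = pipe(image=init_image, mask_image=mask_image, example_image=example_image)
    return result.images[0]
```

Calling `paint_by_example("room.png", "chair_mask.png", "new_chair.png")` (hypothetical file names) would return a PIL image that you can save with `.save("output.png")`.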

I highly recommend reading the paper if you're interested in the details of the Paint By Example model.

Examples

The following examples highlight successful applications of the Paint By Example model.

Example 1: Change a chair's style

[Figure panels: Input, Mask, Example, Output]

Example of changing a chair's style.

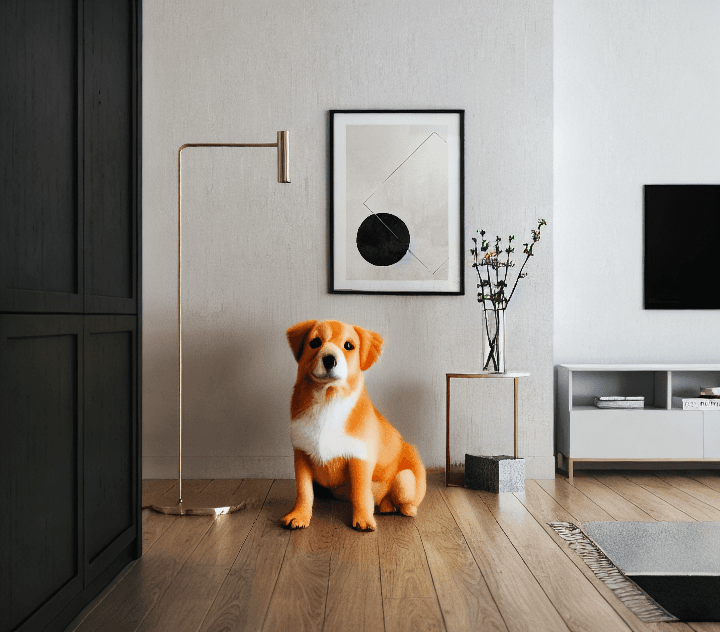

Example 2: Replace a chair with a dog

[Figure panels: Input, Mask, Example, Output]

Example of replacing a chair with a dog.

Model Properties

This section highlights some of the limitations of the Paint By Example model.

Low-Fidelity

The Paint By Example model passes the example image through a low-dimensional information bottleneck. This helps to avoid naive copy-paste artifacts, but as a consequence the model does not preserve details from the example image with high fidelity. (This is a strength for some use cases, but a weakness for others.)
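To build some intuition for why a bottleneck loses detail, here is a toy analogy in plain Python. It is not the model's actual encoder: the "embedding" here is just the mean color of a patch, chosen only because it is an obviously low-dimensional summary. Two patches with different patterns collapse to the same summary, so nothing downstream could tell them apart.

```python
def mean_color(pixels):
    """Summarize a list of RGB pixels as one average color (a 3-number 'embedding')."""
    n = len(pixels)
    return tuple(sum(px[channel] for px in pixels) / n for channel in range(3))


BLACK = (0, 0, 0)
WHITE = (255, 255, 255)

# Two 2x2 patches, flattened row by row, with clearly different patterns.
stripes = [BLACK, BLACK, WHITE, WHITE]
checkerboard = [BLACK, WHITE, WHITE, BLACK]

# The patterns differ, yet their low-dimensional summaries are identical.
print(stripes != checkerboard)                          # True
print(mean_color(stripes) == mean_color(checkerboard))  # True
```

A real image encoder keeps far more information than a mean color, but the same principle applies: whatever the summary drops (logos, text, fine texture) cannot be recovered by the diffusion model.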

Suppose, for example, that you wanted to create a promotional product photo by pasting a product photo onto a new background. This use case would be better served by a different workflow (e.g., a manual cut and paste followed by an image-to-image refinement step).
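The "manual cut and paste" half of that alternative workflow amounts to a per-pixel blend controlled by a mask. Here is a minimal sketch in plain Python, with images represented as nested lists of RGB tuples. The function name `paste_with_mask` and the tiny test images are made up for illustration, and the image-to-image refinement step is not shown.

```python
def paste_with_mask(background, foreground, mask):
    """Blend foreground over background, pixel by pixel.

    mask values are floats in [0, 1]: 1.0 copies the foreground pixel exactly,
    0.0 keeps the background pixel, and values in between give a soft edge.
    """
    composite = []
    for bg_row, fg_row, mask_row in zip(background, foreground, mask):
        out_row = []
        for bg_px, fg_px, m in zip(bg_row, fg_row, mask_row):
            out_row.append(
                tuple(round(m * f + (1 - m) * b) for f, b in zip(fg_px, bg_px))
            )
        composite.append(out_row)
    return composite


# A 3x2 blue background and a 3x2 red "product" image.
background = [[(0, 0, 255)] * 3 for _ in range(2)]
foreground = [[(255, 0, 0)] * 3 for _ in range(2)]
# Left column keeps the background, middle column is pasted, right column is a soft edge.
mask = [[0.0, 1.0, 0.25], [0.0, 1.0, 0.25]]

composite = paste_with_mask(background, foreground, mask)
print(composite[0])  # [(0, 0, 255), (255, 0, 0), (64, 0, 191)]
```

Because pixels under a mask value of 1.0 are copied exactly, product details such as labels survive the paste. A low-strength image-to-image pass could then be used to harmonize lighting and edges.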

Below is an example attempt to produce a product photo:

[Figure panels: Input, Mask, Example, Output]

A failed attempt to copy a product photo onto a different background.

Constrained by the underlying diffusion model

The Paint By Example model is constrained by the representational power of the underlying diffusion model (i.e. it can only generate concepts seen during training). As an example, if the underlying model is poor at generating photo-realistic faces, this will still be true when it is prompted with an example face.

[Figure panels: Input, Mask, Example, Output]

An attempt to generate a photo-realistic person.